Hello. According to the article "Efficient Gaussian blur with linear sampling", it is better to reduce the number of loop iterations (texture fetches) in a Gaussian blur fragment shader by taking advantage of the GPU's bilinear texture filtering.
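The linear-sampling trick can be sketched as follows. This is a minimal Python sketch, not the article's code. It first computes normalized discrete Gaussian weights, then merges each pair of adjacent taps into one bilinear tap placed between the two texels so that hardware interpolation reproduces their weight ratio. The function names and the radius 4 / sigma 2.0 parameters are assumptions chosen for illustration. In a shader, each merged (offset, weight) pair would replace two texture fetches with one. This assumes the texture uses GL_LINEAR filtering and that offsets are divided by the texture size.

```python
import math


def gaussian_weights(radius, sigma):
    """Hypothetical helper: normalized 1D Gaussian weights for offsets 0..radius (one side)."""
    raw = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(radius + 1)]
    # The center tap is used once; every other tap is used twice (left and right).
    total = raw[0] + 2.0 * sum(raw[1:])
    return [w / total for w in raw]


def linear_sampling(weights):
    """Hypothetical helper: merge adjacent discrete taps into single bilinear taps.

    weights[0] is the center tap; taps (1, 2), (3, 4), ... are merged pairwise.
    Returns (offsets, weights) for the center tap plus the merged taps on one side.
    """
    offsets = [0.0]
    merged = [weights[0]]
    for i in range(1, len(weights), 2):
        w1 = weights[i]
        w2 = weights[i + 1] if i + 1 < len(weights) else 0.0  # odd leftover tap
        w = w1 + w2
        # Position the sample between texels i and i + 1 so that bilinear
        # filtering blends them in the ratio w1 : w2.
        offsets.append((i * w1 + (i + 1) * w2) / w)
        merged.append(w)
    return offsets, merged


if __name__ == "__main__":
    discrete = gaussian_weights(radius=4, sigma=2.0)
    offsets, weights = linear_sampling(discrete)
    print(2 * len(discrete) - 1, "discrete fetches vs", 2 * len(offsets) - 1, "linear fetches")
    for o, w in zip(offsets, weights):
        print(f"offset {o:.6f}  weight {w:.6f}")
```

For a 9-tap kernel this prints `9 discrete fetches vs 5 linear fetches`, which is where the expected speedup comes from. The number of texels covered by the kernel stays the same.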

I did some experiments, and it is indeed faster, but only if the framebuffer texture format is not too wide. With the GL_RGB16F texture format I get a big performance improvement (about 25%) from this approach. But with GL_RGB32F, performance instead drops by roughly the same amount (about 25%). Could someone comment on that?

I am testing on an NVIDIA P1000 graphics card.

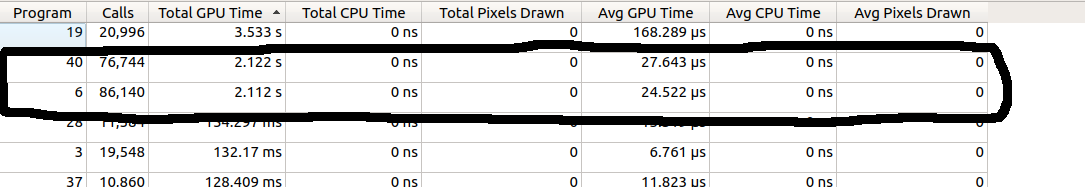

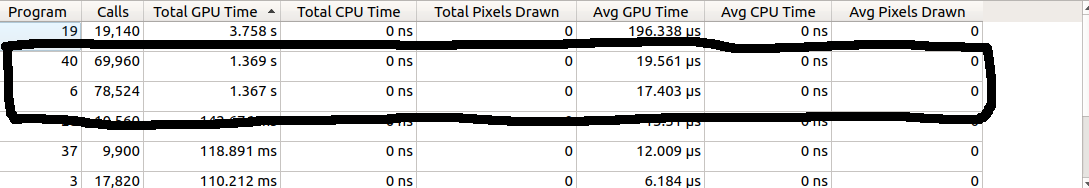

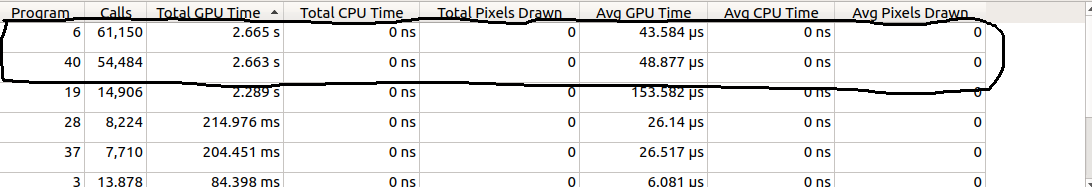

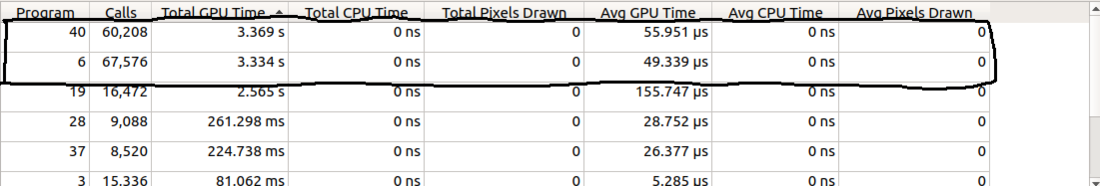

BTW, I use apitrace to measure the performance difference for each specific shader program.