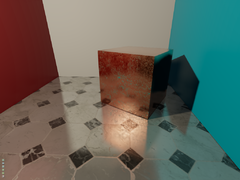

The aim of this project is to create a renderer which almost matches Path Tracing in quality, while generating noiseless images hundreds of times faster. Instead of tracing thousands of rays per pixel to get soft shadows and diffuse reflections, it traces a single ray per pixel and uses smart blur techniques in screen space. It also uses standard Deferred Rendering for rasterizing the main image. One of the project's fundamental rules is that everything is dynamic (no pre-baked data).

The current implementation of ray tracing is very slow, with a significant fixed cost per object. Performance will be dramatically improved after switching the graphics API to DirectX12. The switch is needed to randomly access meshes from within the shader, rather than processing them one by one as is done now. Despite that, image processing of shadows and reflections/refractions is pretty fast, which allows for real-time operation in small/medium sized scenes.

I'm certain that most operations could be sped up 2-10x if some more work was put into them.

In development since 2014, 1500+ hours of work.

Differences from NVIDIA RTX demos (such as SVGF - Spatiotemporal Variance-Guided Filtering):

- engine doesn't shoot rays in random directions, but rather offsets them slightly based on pixel position in image-space. This results in zero temporal noise.

- engine traces rays one layer (bounce) at a time and then stores results in render targets, which allows blurring of each light bounce separately. NVIDIA traces all bounces at once and then blurs the result.

- engine doesn't use temporal accumulation of samples, although it could be added for extra quality.

- NVIDIA demos analyze noise patterns to deduce how much blur needs to be applied in a given screen region. I deduce this information from the distance to the nearest occluder (for shadows) or to the nearest reflected/refracted object. This gives temporally stable results and saves some processing time (a couple of ms per frame for NVIDIA), but introduces its own set of challenges.
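
To illustrate the first point above (offsetting rays by pixel position instead of randomly), here is a minimal C++ sketch. It is not taken from the engine: the helper name `pixelRayOffset`, the `Offset2D` struct and the choice of a 4x4 Bayer (ordered dither) pattern are all assumptions made for the example. The only property it is meant to show is that the offset is a pure function of the pixel coordinate, so the same pixel gets the same offset in every frame and no temporal noise is introduced.

```cpp
#include <array>

// Hypothetical type for this example: a 2D offset in the range [-0.5, 0.5).
struct Offset2D {
    float u;
    float v;
};

// Hypothetical helper, not the engine's actual pattern.
// Maps a pixel coordinate to a fixed offset using a 4x4 Bayer matrix.
// The result depends only on (x, y), so it never changes between frames.
Offset2D pixelRayOffset(int x, int y)
{
    static constexpr std::array<int, 16> bayer4x4 = {
         0,  8,  2, 10,
        12,  4, 14,  6,
         3, 11,  1,  9,
        15,  7, 13,  5
    };

    const int ix = x & 3; // position inside the repeating 4x4 tile
    const int iy = y & 3;

    const int a = bayer4x4[iy * 4 + ix]; // value used for the first axis
    const int b = bayer4x4[ix * 4 + iy]; // transposed lookup for the second axis

    // Center each of the 16 levels inside its cell and shift to [-0.5, 0.5).
    return { (a + 0.5f) / 16.0f - 0.5f, (b + 0.5f) / 16.0f - 0.5f };
}
```

In a sketch like this, the offset would be scaled by the light radius (for shadow rays) or by the surface roughness (for reflection rays) before perturbing the ray direction. The pattern repeats every 4 pixels, which is the kind of regular structure a screen-space blur can then smooth out.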

Working features:

• Deferred Rendering

• Light reflections/refractions

· Locally correct

· Fully dynamic

· Diffuse - for rough surfaces

· Multiple refractions/reflections

· Blur level depending on surface to object distance

• Shadows

· Fully dynamic

· Soft - depending on light source radius and distance to occluder

· "Infinite" precision - tiny/huge objects cast proper soft shadows, light source can be 1000 km away

· Visible in reflections/refractions

· Correct shadows from transparent objects - depending on alpha texture

• Physically Based Rendering - only one material, which supports all effects

• Loading/saving scenes, animations, meshes, generating BVH trees

• Tonemapping, bloom, FXAA, ASSAO (from Intel)
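
The soft shadows listed above depend on the light source radius and the distance to the occluder. A minimal C++ sketch of how such a blur size could be estimated is shown below. It uses the common similar-triangles penumbra estimate, which is an assumption here and not necessarily the formula the engine uses. The function names and the `pixelsPerUnit` and `maxRadius` parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper, not taken from the engine's source.
// Estimates the world-space penumbra width at a shadowed point
// using similar triangles:
//   width = 2 * lightRadius * (lightToReceiver - lightToOccluder) / lightToOccluder
// All distances are measured from the light along the shadow ray.
float penumbraWidth(float lightRadius, float lightToOccluder, float lightToReceiver)
{
    assert(lightRadius >= 0.0f && lightToOccluder > 0.0f);

    // An occluder touching the receiver gives a hard shadow (width 0).
    const float occluderToReceiver = std::max(lightToReceiver - lightToOccluder, 0.0f);
    return 2.0f * lightRadius * occluderToReceiver / lightToOccluder;
}

// Hypothetical helper: converts the world-space width into a screen-space
// blur radius. pixelsPerUnit is the assumed projected size of one world
// unit at the receiver; maxRadius caps the cost of the blur pass.
float blurRadiusPixels(float widthWorld, float pixelsPerUnit, float maxRadius)
{
    const float radius = 0.5f * widthWorld * pixelsPerUnit;
    return std::min(std::max(radius, 0.0f), maxRadius);
}
```

For example, a light of radius 1 placed 10 units from the occluder and 15 units from the receiver gives a penumbra 1 unit wide under this estimate. Because the width depends only on ratios of distances, the same expression applies to tiny and huge objects alike.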

Future plans:

• Switching from DX11 to DX12 - to allow for indexing meshes inside shaders, support for huge scenes

• Shadows for non-overlapping lights can be calculated in a single step! Amazing potential for rendering huge scenes.

• Adjustable visual quality through tracing a few more rays per pixel - like 9, reducing the need for heavy blur

• Dynamic Global Illumination

• Support for skeletal animation

• Support for arbitrary animation - e.g. based on physics - cloth, fluids

• Dynamic caustics

• Volumetric effects, particles - smoke, fire, clouds

• Creating a sandbox game for testing