AhmedSaleh said:

Thanks for the information. Regarding the approach that removes iterating over the triangles, I have an idea; tell me whether it's correct. I make a kernel, enqueue it, get all the bounding boxes and store them in an output buffer. Then I run another kernel with all the bounding boxes as input and start the 2D scan from the bounding-box limits. Is that correct? But how do I choose the bounding-box size and offset so that the second kernel goes there and draws?

I guess you mean the approach I proposed (sorting by area), but it's not clear to me what you're asking.

In practice we end up with more than two kernels. Here's some pseudocode:

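```
// 1st kernel; input: all triangles of the scene; output: buffer sized for all triangles

for each triangle
{
    transform vertices, do frustum and backface culling;

    if (survives)
    {
        rect = CalculateClippedBoundingRectangle(triangle);

        // 64-bit key (ulong): area in the high 32 bits, triangle index in the low 32 bits.
        // A 32-bit uint can't hold both; shifting it by 32 is undefined.
        ulong key = ((ulong)rectangleArea(rect) << 32) | triangleIndex;

        buffer.Append(key); // e.g. slot = atomic_inc(&counter); buffer[slot] = key;
    }
}

// 2nd kernel: sort the buffer by key, e.g. using radix sort.

// 3rd kernel: for each surviving triangle, in sorted order, rasterize it from one thread, using atomics to write to the framebuffer.
```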

There is no need to cache the bounding rectangle, although you can, of course, e.g. if the rasterizer needs it. We could also cache the transformed vertices, or have an initial kernel that transforms each vertex just once.

With such caching, we reduce ALU work at the cost of more memory bandwidth. It's probably not worth it.

We process the triangles in sorted order only so that all threads in a workgroup get workloads of similar size: threads that run in lockstep can't finish before the slowest one does.

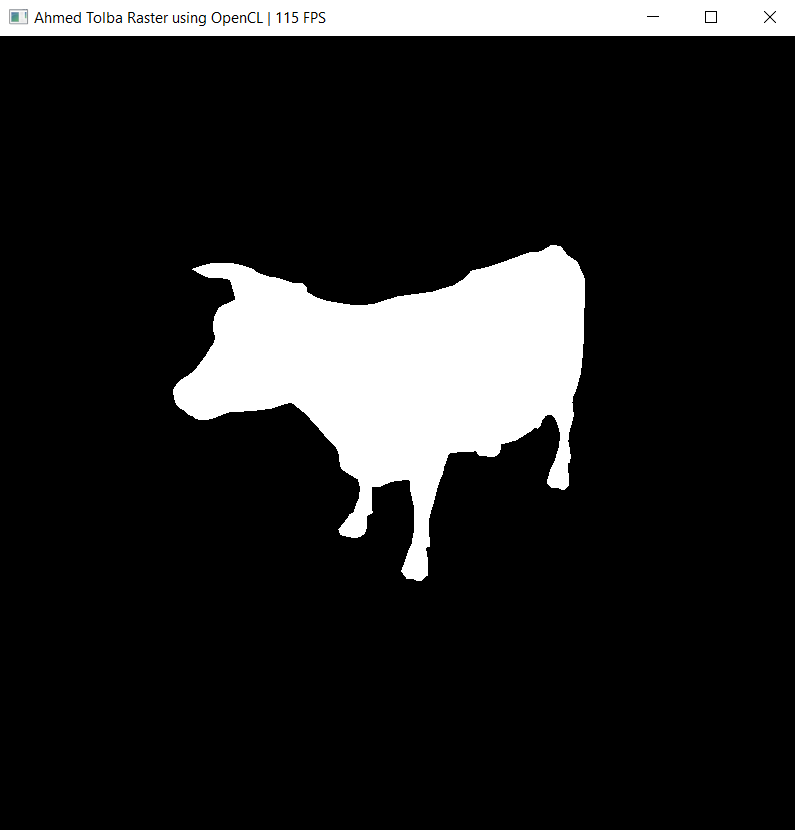

But if all triangles are already about the same projected size, as in your initial picture, my proposed optimization is not worth it. It only pays off in a complex scene with both close-up and distant geometry.

And we need enough triangles to saturate the GPU with per-thread rasterization.

A few very large triangles in the scene are still bad. E.g. if a triangle covers the entire screen, a single thread fills the entire screen without any parallelization.
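To put a number on it, assuming a 1920×1080 framebuffer (an example resolution, not one from this thread), one full-screen triangle means

$$1920 \times 1080 = 2\,073\,600$$

pixel iterations executed sequentially by one thread, and its lockstep group can't retire until that thread is done. A per-pixel launch would instead spread those 2 073 600 pixel tests across that many work items.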

For such cases, your initial approach would be better. So you could perhaps keep it and use it for the last (largest) triangles in the sorted list.